A lot of similar context setting is now visible even in how Amazon’s Alexa works in India, where we have a different way of expressing things in English. Then when I say ‘Nicole Kidman,’ it starts looking for content where both the stars are featured instead of switching to just Kidman movies. For instance, using the right metadata along with machine learning powered by artificial intelligence, the box understands that when I say ‘Tom Cruise,’ it looks for content related to the Hollywood star across all sources. TiVo’s Experience 4 software, which the company is pitching to partners in India, can understand the meaning and context of the command. But it is no longer about just recognising voice and executing the command. TiVo’s senior director for international marketing Charles Dawes showed me how their box could now understand voice. Last week, I also happened to see a demo of US entertainment technology company TiVo’s latest offerings. Anyway, for me, this is a clear sign that artificial intelligence can actually help do stuff more efficiently. At the moment, stability of your internet connection during the process and the amount of ambient noise during the recording seem to be playing a role in the accuracy of the transcription. Of course, neither of the apps are perfect.īut they can do around 80% of your work, which is good enough.

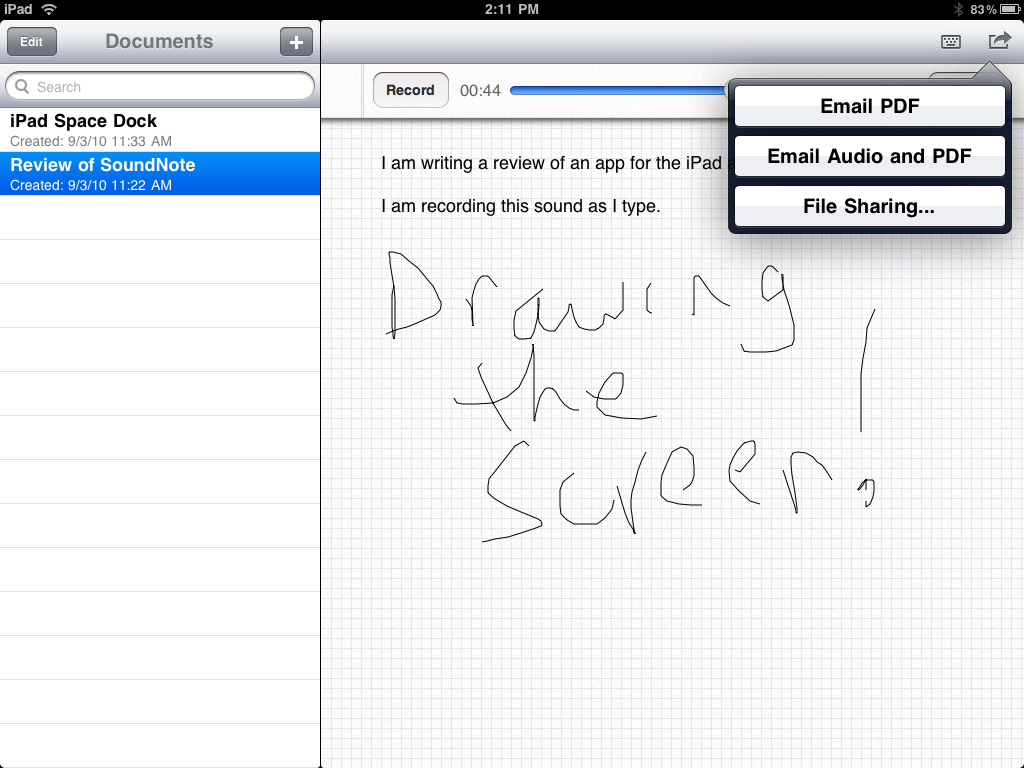

This app records meetings, or other conversations, and transcribes what was said, also understanding and tagging different voices in the process. The other app, Otter Voice Meeting Notes, was suggested by a journalist friend. Yes, you need to make a call via the app, but it will give you a text of what transpired within a few minutes of the call. The first is an app called Tetra, which lets you transcribe telephone calls. So, I was pleasantly surprised over the past couple of weeks when I discovered two apps that make use of the latest technologies to help with voice recognition and transcription. Even as voice recognition technologies got better and better, this seemed like one area where I could still use some help. These worked in bits and pieces, but there was never a permanent solution. I would switch on the recordings and see if the ‘dictate’ option on Google Docs or Apple Notes would be able to write it down for me. Over the years, I tried a lot of ways to get technology to help me solve this issue. This meant there was more transcription staring at me. While this helped me organise my notes, it also pushed me into the habit of jotting down just keywords during interviews. The beauty of this simple app was that the notes were linked to the audio, and as I clicked on a line, it would show me what was being said at that exact point. A few years ago, I discovered a wonderful paid app called SoundNote that let me record the audio and take notes along with it. I have always wanted technology to be able to help me there. But between the meeting and writing is a lot of transcription of long interviews. I’d consider that notable.I do interview a lot of people who I end up writing about. Moreover, such tablets remain expensive, niche products, ones I’m sure most college and high-school students cannot afford and do not have. 
I can imagine wanting a keyboard to take down sufficient notes in a detailed class or lecture, as opposed to an interview I plan on splicing up anyway. But all three have much to recommend them and offer a vast leap over traditional methods of note taking-at least for those of us with woeful handwriting and the need to get down lots of quotes.īut all these newfangled apps do raise a question: Is your iPad, smartphone, or tablet really an ideal place to be taking notes, anyway? I, at least, need to hunt and peck with two fingers to type on my iPad, slowing me down considerably. And Notability never did send me my files with the audio attached for some reason. SoundNote never crashed, and never erred in sending my notes to me. It does seem that the bells and whistles tend to make Notability and AudioNote less stable, at least on my iPad 2.
